Mechanical keyboards have swept the mainstream in recent years, saving typists and gamers alike from the scourge of cheap, mushy typing. However, a secret third option might prove to be even better. Most keyboards, from those found on the best Windows laptops to Apple’s Magic Keyboard for iPad Pro or Mac, are membrane keyboards, which use a rubber or silicone layer under the keys to register presses. Mechanical keyboards, on the other hand, use discrete, customizable switches for each key. In addition to being relatively cheap, the best mechanical keyboards can turn typing into a pleasure rather than a chore. But you may have heard about the new kid on the block: Hall effect keyboards. Although they started out as gaming-centric products, they have lately become great options for everyday typing as well.

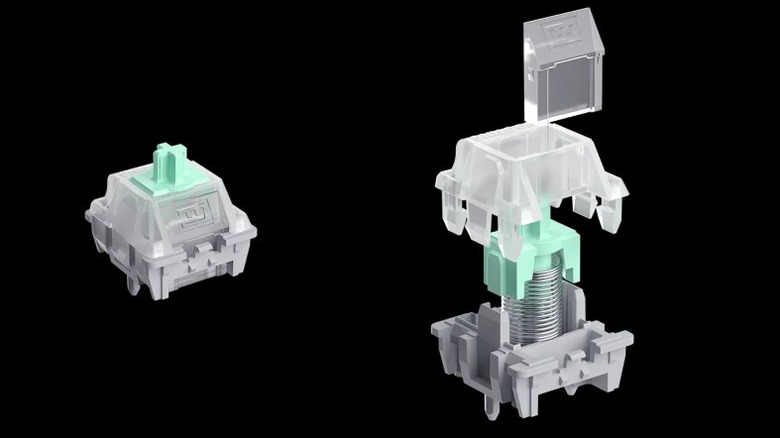

Regular mechanical keyboard switches have been around since the early days of personal computing. They contain springs that keep two metal contacts separated until the user presses down, at which point the contacts touch and complete a circuit. Hall effect switches, by contrast, use magnetic fields. Each switch carries a magnet in its stem, and a Hall effect sensor on the circuit board beneath it outputs a voltage that changes as the magnet moves closer during a keypress. This means Hall switches don’t need electrical contact to actuate a keystroke, and they can also sense exactly how far down they’re being pressed. The actuation point can therefore be customized, enabling some very cool applications, especially for video gaming.
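To make the idea of a customizable actuation point concrete, here is a minimal sketch in Python. It is not any vendor's firmware: the function names, the 4.0 mm total travel, and the linear mapping from sensor reading to key travel are all assumptions chosen for illustration (a real keyboard would likely rely on a per-switch calibration curve).

```python
TOTAL_TRAVEL_MM = 4.0  # assumed full key travel; this varies by switch


def reading_to_travel(reading: float, rest: float, bottomed: float) -> float:
    """Estimate key travel in mm from a raw Hall sensor reading.

    `rest` and `bottomed` are the (hypothetical) calibrated readings with the
    key fully released and fully pressed. The linear mapping is a
    simplification used only for this sketch.
    """
    fraction = (reading - rest) / (bottomed - rest)
    fraction = max(0.0, min(1.0, fraction))  # clamp to the physical range
    return fraction * TOTAL_TRAVEL_MM


def is_pressed(travel_mm: float, actuation_mm: float) -> bool:
    """A key registers once its travel reaches the user-chosen actuation point."""
    return travel_mm >= actuation_mm


# The same physical press registers or not depending on the chosen setting.
travel = reading_to_travel(300, rest=100, bottomed=900)
print(is_pressed(travel, actuation_mm=0.5))  # shallow, gaming-style setting
print(is_pressed(travel, actuation_mm=2.0))  # deeper, typing-style setting
```

Because the switch reports a continuous position rather than a simple on/off signal, changing the actuation point amounts to changing one number in software rather than swapping hardware.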

Although they’ve seen the most prominence in the gaming segment of the keyboard market, Hall effect keyboards are becoming more common among average typists. So, are these futuristic input devices ready for prime time, or should the typical consumer still opt for a mechanical keyboard? Here’s what you need to know.

Hall effect keyboards are great for gaming and getting better for everyday use

As mentioned, Hall effect keyboards have seen the widest adoption among the gaming crowd, but some of the benefits for gaming also translate into everyday use. The ability to fine-tune the actuation point (how far down you need to press a key before it registers as an input) means you can pull off some seriously advanced moves in competitive games. Last year, we reported why some gamers called Razer’s Snap Tap feature cheating, since it allowed keyboards, especially those with Hall effect and optical switches (which are similar but use light instead of magnets), to trivialize strafing techniques that require hours of practice on mechanical switches. The same adjustability could make typing easier for speedy typists whose fast fingers trigger unintended keys and leave them backspacing constantly.

If you’re getting spacebar bounce and accidentally adding extra spaces when you type, adjusting the actuation point further down can eliminate those errors. If you want to get even more advanced, many Hall effect keyboards allow multiple actuation points on a single key. This allows gamers to bind multiple controls to a single key, but for everyday use, you could arrange a slight press of the backspace key to erase one letter at a time, while a full press erases an entire line.
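The backspace idea above can be sketched as a simple lookup on how far the key has travelled. This is only an illustration: the function name, the action labels, and the 1.0 mm and 3.5 mm thresholds are assumptions, and a real keyboard would also have to decide when to fire each action (for example, waiting briefly to see whether a light press turns into a full one).

```python
from typing import Optional


def backspace_action(
    travel_mm: float,
    light_mm: float = 1.0,  # assumed first actuation point
    deep_mm: float = 3.5,   # assumed second actuation point
) -> Optional[str]:
    """Pick an action for the backspace key based on how far it is pressed."""
    if travel_mm >= deep_mm:
        return "delete_line"  # full press: erase the entire line
    if travel_mm >= light_mm:
        return "delete_char"  # slight press: erase one letter
    return None               # not pressed far enough to register


for travel in (0.4, 1.5, 3.8):
    print(travel, backspace_action(travel))
```

Checking the deeper threshold first matters here, since a full press also passes the lighter threshold on the way down.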

Another benefit that shouldn’t be overlooked is durability. Since a Hall switch registers keystrokes without any electrical contacts touching, it doesn’t wear out nearly as quickly as a mechanical switch, which makes physical contact with each keystroke. If you’re someone who types often (for example, a writer, secretary, or receptionist), using Hall switches could save you money in the long run.

Are Hall effect keyboards comfortable for everyday use?

Although some of the unique features enabled by Hall effect keyboards may be great for gaming and useful for the average user, there’s a reason most non-gamer keyboard enthusiasts haven’t bought them. In general, gaming keyboards have different priorities than normal keyboards. While gaming, users press a few specific keys at precise times, whereas everyday users type emails and documents using the entire keyboard. As a result, great gaming performance often comes at the expense of the typing experience. However, as more companies adopt Hall effect technology, there’s been an increased focus on making these keyboards feel great for everyday use.

Hall effect keyboards like the Keychron K2 HE, which aim to bridge the gap between gaming performance and typing comfort, are beginning to proliferate, and they’re drawing praise from keyboard experts outside the gaming world. SlashGear gave the Keychron Q1 HE a perfect 10/10 in its review, finding it to have a delightfully smooth typing feel and excellent typing accuracy thanks to its Hall switches. Offerings like the Nuphy Field75 HE have garnered great reviews, too. Surprisingly, even keyboards from gaming brands like Wooting are drawing praise from typists.

If you’re not a gamer and you’re looking for the best typing experience, the most reliable mechanical keyboard brands still offer far more choices than you’ll find from brands that offer Hall effect keyboards. However, if you want a keyboard that can deliver the best of both worlds, Hall effect keyboards are worth considering.
