Microsoft 365 is available in free and paid versions, so we’ve explored the differences and discovered which could be the best option for you

(Image credit: Claudio Scott / Pixabay)

Microsoft 365 is available in lots of different guises for home and business users, but these productivity packages can get expensive – so it’s no surprise that lots of people turn to Microsoft 365’s free version instead.

There are usually compromises when it comes to free versions of paid apps, though. That means it can be difficult to know if these versions are worth using or if you should just stump up the cash for a paid version instead.

To stave off the confusion, we’ve weighed up the free and paid versions of Microsoft 365 to find out exactly what you get with the free app – and to see how the paid products go further.

Microsoft 365 free: what’s included?

Microsoft 365 offers a free version designed for users who need basic functionality without the cost. This version, accessible online via Office.com, lets you use stripped-down editions of popular applications such as Word, Excel, PowerPoint, and OneNote directly in your web browser. These basic features are sufficient for light document creation, spreadsheet work, and presentations, making it a good option for occasional users or those with simple needs.

In addition to the free version, Microsoft offers a 30-day free trial of its paid Microsoft 365 plans. The trial lets you try the full desktop versions of the apps, with the advanced features that the web editions lack, before you commit to paying. During the trial you also get the larger OneDrive cloud storage allowance that comes with a subscription, so you can access your files from any internet-connected device.

Furthermore, Microsoft 365 Education is free for students and educators, subject to eligibility verification through participating educational institutions. This version includes additional tools designed for learning, such as Microsoft Teams for classroom collaboration and Intune for Education to manage devices securely. With these tools, educators can create a more interactive and engaging learning environment, while students gain experience with software that can support their studies and future careers.

Microsoft 365: should you pay?

Whether to pay for Microsoft 365 depends on your needs, usage habits, and specific requirements.

A paid subscription may be worthwhile if you need regular access to the full desktop versions of applications such as Word, Excel, and PowerPoint. These desktop applications offer advanced features, more extensive formatting options, and greater functionality than their online counterparts. A subscription also includes extra OneDrive cloud storage, so you can access your files from any device and share them with collaborators.

On the other hand, if your needs are more basic and you mainly use the online versions of these applications for simple tasks like drafting documents, creating spreadsheets, or making presentations, the free version of Microsoft 365 might be sufficient. The online applications are user-friendly and accessible, though they lack some of the advanced features found in the paid version.

It’s also important to consider your budget. Prices vary depending on the plan you choose, so weigh the cost against how much you will actually use the software. If you only need it occasionally, paying month to month rather than annually, or sticking with free alternatives, could be the more economical choice. Weighing these factors should help you decide which option suits you best.

Microsoft 365: business versions

Microsoft 365 provides a comprehensive suite of business plans tailored to address the diverse needs of organizations of all sizes. These plans typically include essential productivity applications such as Word, Excel, PowerPoint, and Outlook, serving as the backbone for daily operations. In addition to these core tools, Microsoft 365 enhances business functionality with features like business-class email, which includes custom domain names and advanced calendar sharing, facilitating professional communication.

One of the standout offerings is OneDrive, which provides secure online storage and file-sharing capabilities. This empowers teams to access documents from anywhere, collaborate in real time, and ensure data safety with automated backup features. Complementing these tools is Microsoft Teams, a versatile platform that fosters seamless collaboration through chat, video conferencing, and integrated file sharing, making remote work and communication efficient.

Moreover, organizations can use advanced security features to safeguard sensitive data from threats, depending on the selected plan. Features like Multi-Factor Authentication (MFA), advanced threat protection, and data loss prevention help businesses protect their information and maintain compliance with industry regulations.

Specialized applications, such as Access for database management and Publisher for professional-quality publications, are also available for those requiring tailored solutions. With varying tiers, businesses can select a plan that best suits their size, budget, and specific requirements, ensuring they have the tools to boost productivity, enhance collaboration, and maintain a competitive edge in their industry.

Microsoft 365 represents a versatile and scalable solution for modern businesses, ensuring organizations can thrive in a digital workspace.

Microsoft 365 paid and free versions: what should you use?

When deciding between the paid and free versions of Microsoft 365, it’s essential to evaluate your specific needs and how you plan to use the software.

If you mainly use Word, Excel, and PowerPoint for quick, basic document editing, the free version available at Office.com may be all you need. It provides online access to the essential features, making it convenient for casual users or anyone who only creates and edits documents occasionally. The free version is ideal if you don’t need advanced functionality, offline access, or extensive cloud storage.

However, if your work demands a more robust set of tools, a paid Microsoft 365 subscription may be a better fit. Paid plans include the full desktop applications, giving you the complete range of features, including advanced editing and formatting tools and more powerful data analysis in Excel. They also include the Outlook desktop app, and business plans add business-class email, making them suitable for professionals who need reliable communications.

One of the significant advantages of a paid subscription is the extra OneDrive cloud storage (1TB per person on Microsoft 365 Personal and Family, compared with 5GB on a free Microsoft account), which makes it easier to store, share, and collaborate on documents. Features like Microsoft Teams also support communication and teamwork, especially for those working in larger organizations or on group projects.

Consider your usage patterns, the complexity of your projects, and whether you often need offline access to documents when weighing your options. Frequent travelers or remote workers, for instance, may find offline access crucial, and that requires the desktop apps that come with a paid subscription.

In summary, the free version is fantastic for casual users or students, while the paid plans cater to professionals, business users, and anyone who requires a comprehensive suite of productivity tools. Make your choice based on how frequently you’ll use the software and the level of functionality that best suits your needs.

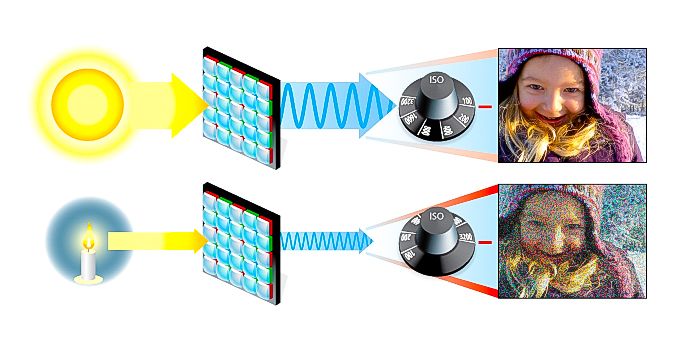

You only need to use a high ISO in low light if the camera is handheld, or the subject is moving. With static subjects, and a tripod, you can use the slowest setting – here a setting of ISO100 (Image credit: Chris George)

You only need to use a high ISO in low light if the camera is handheld, or the subject is moving. With static subjects, and a tripod, you can use the slowest setting – here a setting of ISO100 (Image credit: Chris George)

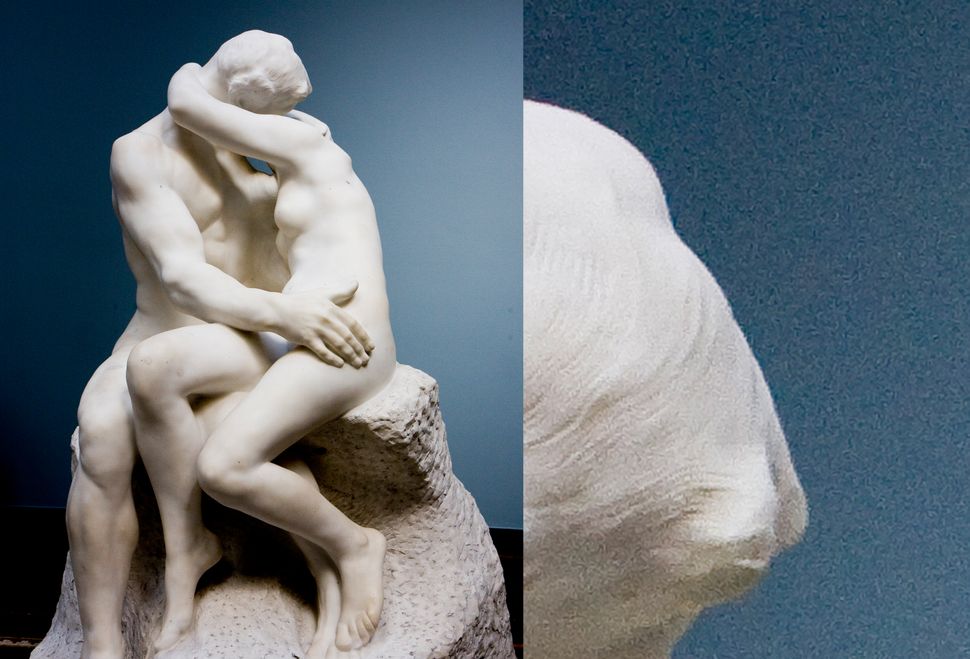

Luminance noise: Traditional monochromatic grain in darker areas (as seen in the wall behind the statue in the detail above right) is typical of luma noise

Luminance noise: Traditional monochromatic grain in darker areas (as seen in the wall behind the statue in the detail above right) is typical of luma noise

(Image credit: Shutterstock)

(Image credit: Shutterstock)